Mixing of Virtual and Actual Reality

1. Demo

Demo scene: RGB.unity

In practical AR glasses applications, it is sometimes necessary to fuse the 3D scene shown in the glasses with the image captured by the RGB camera, and then output the result to an external display such as an LED screen. The virtual and real fusion SDK for AR glasses is designed for this purpose.
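The SDK's internal fusion method is not described on this page. To illustrate the general idea only, here is a minimal sketch of standard "over" alpha compositing, in which a rendered virtual frame with per-pixel alpha is layered on top of a camera frame. The function name `composite_over` and the frame layout (rows of RGBA tuples for the virtual layer, rows of RGB tuples for the camera, all 0-255) are hypothetical assumptions for this example, not part of the Xvisio SDK.

```python
from typing import List, Tuple

# Hypothetical frame layouts for this sketch (not an SDK type):
Pixel = Tuple[int, int, int]          # camera pixel: R, G, B in 0-255
VPixel = Tuple[int, int, int, int]    # virtual pixel: R, G, B, A in 0-255


def composite_over(virtual: List[List[VPixel]],
                   camera: List[List[Pixel]]) -> List[List[Pixel]]:
    """Blend a rendered virtual frame over a camera frame ("over" operator).

    Each output channel is a * virtual + (1 - a) * camera, where a is the
    virtual pixel's alpha scaled to [0, 1]. Fully transparent virtual pixels
    let the camera image show through unchanged.
    """
    # Both frames must have identical dimensions.
    if len(virtual) != len(camera) or any(
            len(v) != len(c) for v, c in zip(virtual, camera)):
        raise ValueError("virtual and camera frames must have the same size")

    out: List[List[Pixel]] = []
    for vrow, crow in zip(virtual, camera):
        row: List[Pixel] = []
        for (vr, vg, vb, va), (cr, cg, cb) in zip(vrow, crow):
            a = va / 255.0  # normalize alpha to [0, 1]
            row.append(tuple(
                round(a * v + (1.0 - a) * c)
                for v, c in ((vr, cr), (vg, cg), (vb, cb))))
        out.append(row)
    return out


if __name__ == "__main__":
    camera_frame = [[(100, 100, 100), (10, 20, 30)]]
    # An opaque red virtual pixel and a fully transparent one.
    virtual_frame = [[(255, 0, 0, 255), (0, 0, 0, 0)]]
    print(composite_over(virtual_frame, camera_frame))
```

In a real pipeline this blending would typically run on the GPU per frame; the pure-Python loop is only meant to make the per-pixel arithmetic explicit.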

2. Development Tutorial

The following steps use the Gesture demo as an example.

Step 1:

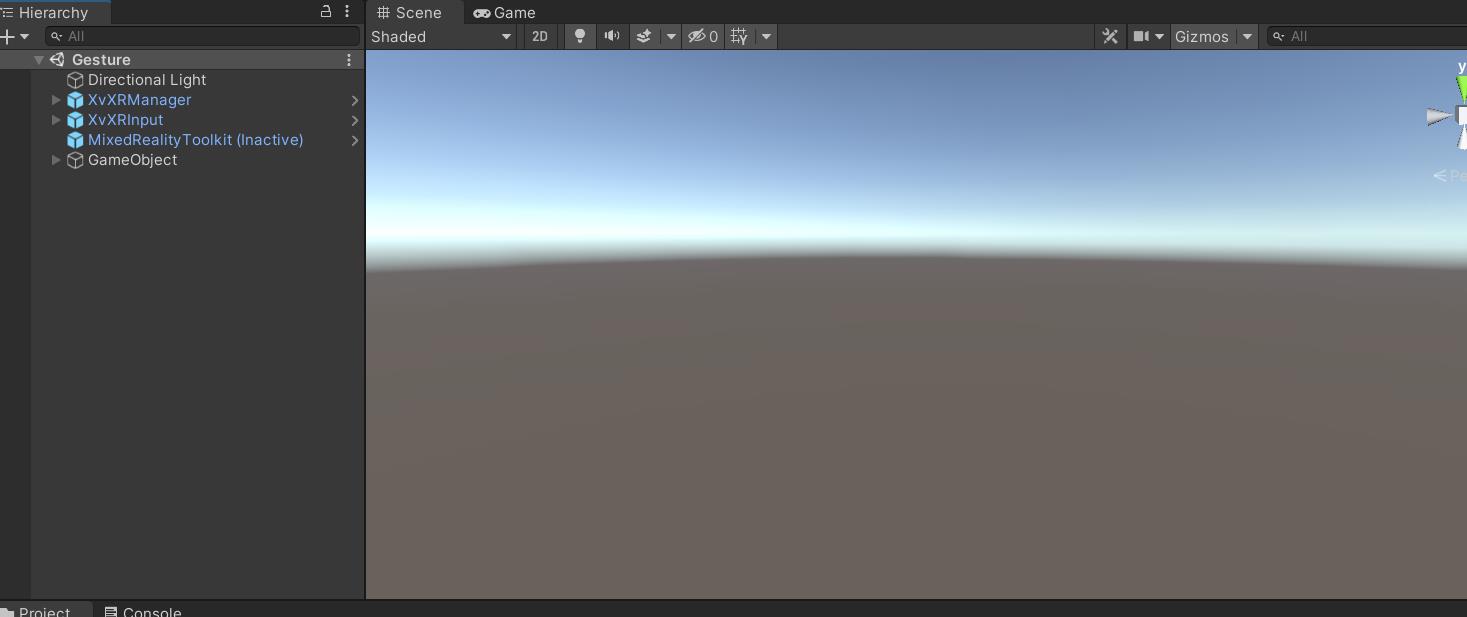

Open the Gesture demo scene (Gesture).

Step 2:

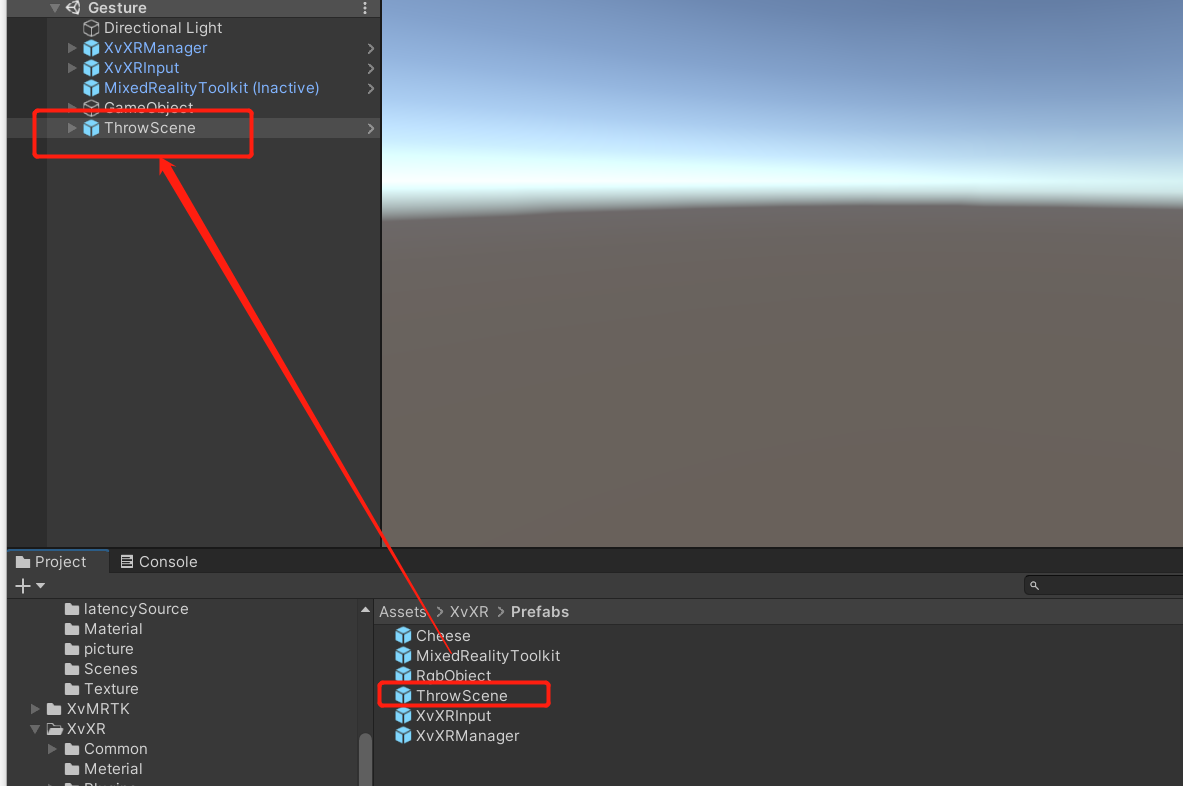

Drag the "ThrowScene" prefab from "XvXR/Prefabs" into the scene. The Mixing of Virtual and Actual Reality function is now integrated into the current scene.

Build the APK and run it to see the fused virtual and real image on the mobile phone screen. The fused image can then be projected onto a TV or other device using the phone's screen projection function.